Intel India hosted the first edition of its artificial intelligence developers conference, AI DevCon, in Bengaluru on 8 August, following a similar event in the US. The idea of AI DevCon is to bring together key stakeholders in data science, machine and deep learning, application development and more to work towards making AI mainstream. Intel also used the occasion to talk about its end-to-end solutions across software, hardware and the ecosystem, which enable an AI-first approach to doing things.

In addition to the over 500 developers who attended, AI DevCon also had sessions with Intel’s key industry partners, who gave real-life examples of using AI and deep learning in their day-to-day operations. Verticals such as e-commerce, healthcare, software consulting, telecom infrastructure and more were covered over the day-long sessions.

[caption id=“attachment_4961011” align=“alignnone” width=“800”] Gadi Singer, vice president and architecture general manager, AI at Intel. Image: Intel[/caption]

Evolution of deep learning

In many of the AI conversations we hear these days, the underlying technology involves not just machine learning but deep learning as well, which goes well beyond task-specific machine learning. Google Photos making a timeline album of your kids over the years, Microsoft Translator recognising foreign text through your phone camera and showing you a translated version on screen, Amazon Echo responding to your voice queries: these are all examples of deep learning.

Gadi Singer, vice president and architecture general manager, AI at Intel, used his keynote to take us through the various stages in the development of AI and deep learning. Calling 2014-15 the birth of deep learning, Singer said that around this time, technologies such as speech and image recognition got a big boost.
Most of it was at an experimentation stage, with early adopters and innovators being the only movers and shakers in the field. This era involved machines being fed lots of data so that they could recognise images or identify voice commands. “C++ and low-level languages were the only means, but that wasn’t scalable. Hardware-wise, GPU came on the same level as CPU,” said Singer. Nvidia, the gaming GPU giant, took early strides in this era thanks to its parallel processing units, which were well suited to training machine learning models.

Keeping current trends in mind, Singer feels that 2019-20 is when deep learning will reach a level of maturity. Back in 2014-15, image recognition involved simple yes-or-no answers for recognising objects, whereas now we have 3D medical image databases where, for instance, potentially malignant cells are identified and the necessary decisions are taken. These tasks are no longer in the experimentation phase but are employed in real-world scenarios.

[caption id=“attachment_4957721” align=“alignnone” width=“1280”]

The inaugural Intel AI DevCon in India took place in Bengaluru on 8 August[/caption]

“It is much easier for data scientists to focus on work in AI and not worry with programming for complex AI work. The real-life inferences are more important than mere training,” said Singer.

The emergence of machine learning frameworks further led to the democratisation of data science, according to Singer, as it let developers build models and focus on AI rather than spend time trying to program a complex model. The use of open source machine learning frameworks such as TensorFlow, Caffe2 and Microsoft Cognitive Toolkit has enabled quicker turnaround times for deep learning models and allowed AI to scale.

According to Singer, the world is headed towards AI models which derive inferences from the data fed to them. By this, he means AI models that help with decision making, rather than data merely being used to train models. To give an example, in the past a machine could only tell whether the animal shown to it was a dog or not, whereas now machine learning algorithms can tell you not just that it’s a dog, but which breed of dog it is. The scaling of the underlying technology and training models, along with the ability to process that much data, has led to this revolution. In medicine, for instance, the data coming from a smartwatch or fitness app could enable a machine learning model to determine whether the user is a heart patient. This ability to arrive at conclusions based on the available data is called inferencing.

Addressing bias in AI

While AI has been a value-add in a lot of areas, there have also been instances where it has led to disastrous results. In 2015, the Google Photos algorithm misidentified photos of black people as gorillas. It created a furore, and Google had to issue an apology and eventually fix things.

Unfortunately, AI models are only as good as the data that’s used to train them. There are global concerns that training data could carry a bias that leads to strange outcomes and to discriminatory situations in real life. A white paper explains around 20 cognitive biases that can creep in as we train machine learning models to be predictive in nature. Bias creeping into an AI model can completely derail the inference derived by the model.

Acknowledging this issue, Singer said that the introduction of bias, intentionally or unintentionally, reflects on the people who develop the AI systems. “The best way to quickly address the issue is to deal with the bias of the development team and to have a more diverse team in the first place. You need to work on the team that will eventually develop the AI system, as any inherent bias in any team member could manifest in the AI models,” said Singer, stressing the need to have the right checks and balances in place.

(Also read: **Artificial Intelligence has more pressing issues, such as biased learning models, which need solutions**)

Intel’s tools for expanding AI usage in real life scenarios

Intel spoke about providing an end-to-end solution to help accelerate innovation in deep learning technology. Recently, Intel even announced that it had sold $1 billion worth of AI-focussed Xeon chips. Navin Shenoy, Intel’s data centre chief, said that the company has been able to modify its CPUs to become more than 200 times better at artificial intelligence training over the past several years.

At AI DevCon, Intel spoke about its entire hardware and software portfolio. The chart below shows Intel’s hardware portfolio, going from the multi-watt Xeon Platinum processors for data centres to the milliwatt Intel Movidius chips focussing on vision processing. To be quite frank, it looks like Intel was trying to force-fit many different kinds of processors for different workloads under the AI umbrella. The Xeon and Xeon Platinum lines, the Intel Core series and Iris Graphics have use cases in regular computing work and data centre related tasks. Yes, these processors fulfil the need for scaling, but calling a data centre processor an AI processor seems a bit of a stretch. You can drive any car on a race track, but that doesn’t mean that you’re driving a racing car.

[caption id=“attachment_4957781” align=“alignnone” width=“1280”]

Intel processor portfolio from multi-Watt Xeon line to milli-Watt Movidius chipset. Image: tech2[/caption]

Intel also spoke about the upcoming Nervana Neural Network Processor (NNP) L-1000, codenamed Spring Crest, which is expected to be released in 2019. This is the second generation of Intel processors built specifically for AI tasks. Using an AI processor tag makes a lot of sense here, as it does with the Movidius chipset, whose Movidius Neural Compute Stick does specific tasks at increased speeds. Nervana is Intel’s answer to the hegemony of Nvidia, which presents its GPU architecture as the ideal way to train deep learning models.

Amir Khosrowshahi, vice president and general manager of the Artificial Intelligence Product Group (AIPG), also announced something called the nGraph compiler. So far, every processor-based edge compute device has had a one-to-one relationship with ML frameworks. With the hardware portfolio expanding, developers can get overwhelmed when programming each device to interface with a given ML framework.

“To hide the complexity of the hardware from developers, we need a middleware that can handle software and hardware compatibility. nGraph acts as that intermediary. nGraph is a library for machine learning, on top of which you can write your software,” said Khosrowshahi.

[caption id=“attachment_4957711” align=“alignnone” width=“1280”]

Intel nGraph deep learning compiler. Image: tech2[/caption]

Where’s India on the AI map?

Intel India is working with academia, the government and the private sector to build a trained AI workforce. According to Intel India managing director Prakash Mallya, Intel has trained over 99,000 developers, students and teachers, has tie-ups with over 50 universities, has 45 Intel Student Ambassadors and has over 20 partners on its Intel AI Builder platform.

“This streak will continue as we engage with local platforms such as Analytics Vidhya to train data scientists and developers,” said Mallya. Intel India will also be collaborating with companies such as Philips, Arya.ai and Mphasis NEXT Labs to deploy their AI portfolio in local ecosystems.

[caption id=“attachment_4957731” align=“alignnone” width=“1280”]

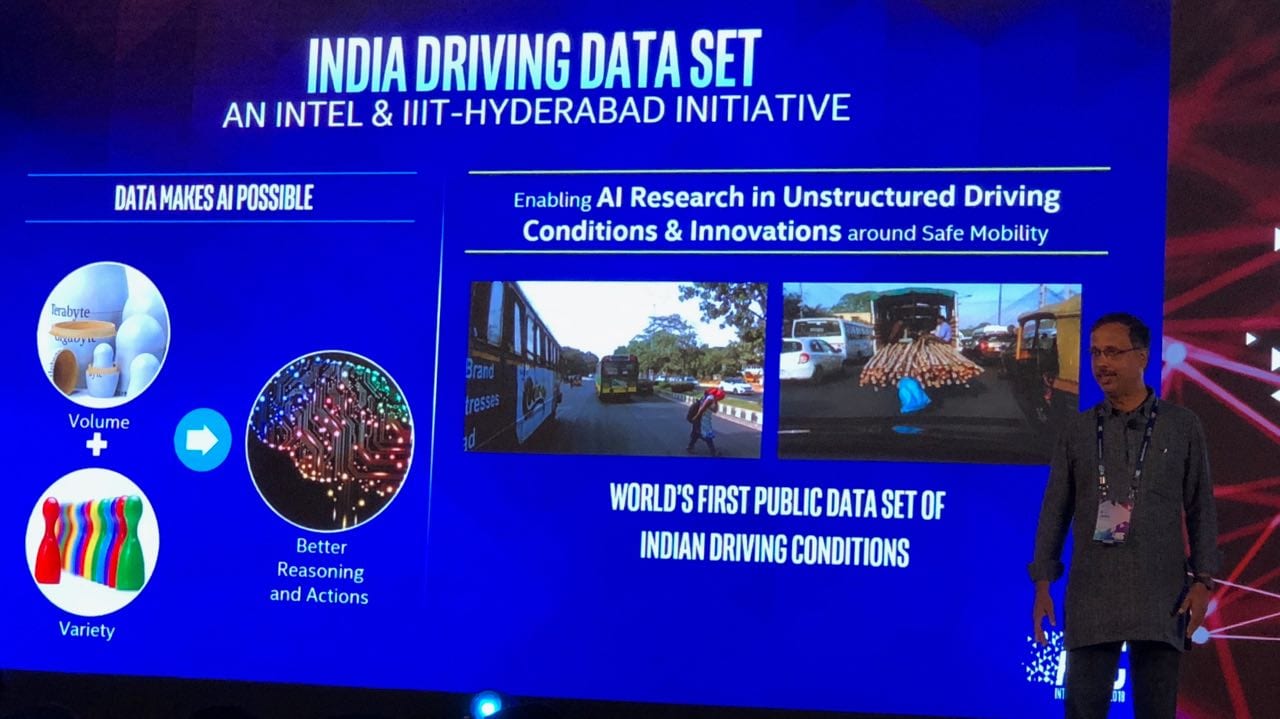

Intel is working with IIIT Hyderabad to study unstructured driving data. Image: tech2[/caption]

According to Mallya, the sheer diversity presented by India gives AI models a variety of data sets to work with. “If an AI model can work successfully in India, it can work easily anywhere else in the world,” said Mallya.

An interesting case study presented on stage was Intel’s partnership with the International Institute of Information Technology (IIIT) Hyderabad, which is using unstructured data, such as Indian driving and road conditions, to create a public data set of Indian driving conditions. This data set is expected to help future inference algorithms. The ambition is to use this data to assist an autonomous vehicle in navigating Indian roads. I, for one, will reserve my thoughts on this for when I actually see even a trial run of an autonomous vehicle in India.

Intel’s India roadmap is heavily reliant on its partners, and Intel is leaving no vertical untouched. Thanks to its legacy in the computing business, Intel has a range of clients to work with. According to Khosrowshahi, to whom tech2 has spoken in the past, Intel works with its clients (most of whom aren’t really deep-learning companies) to solve the issues they present.

“They can discover ways of improving their processes, but it will take them much longer. Whereas since we already have the underlying technology and have made all the possible mistakes, it is just easier for our clients to let us provide the solutions,” said Khosrowshahi.

At AI DevCon, we saw some clients from the private sector present their case studies, prominent among them being Philips, Wipro Holmes and Mphasis NEXT. Intel was tight-lipped, however, when asked if it was working on any government-related projects.


