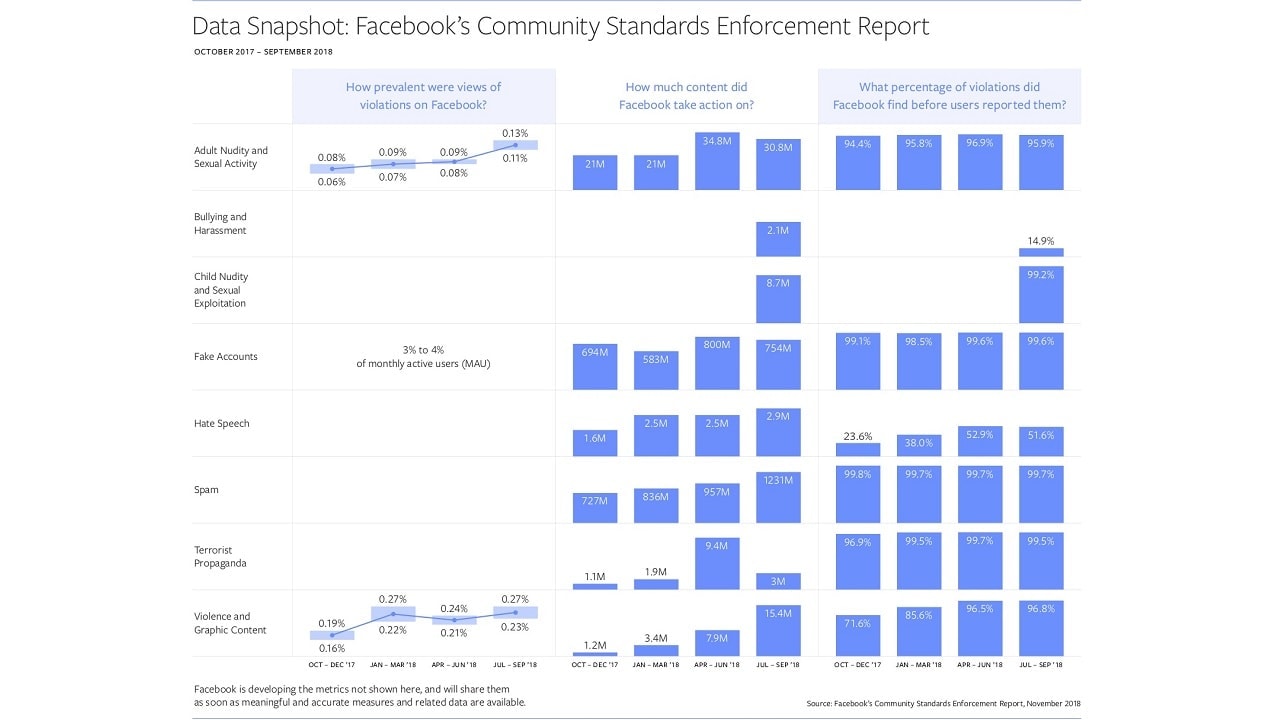

Amid the uproar created by a scathing New York Times report, Facebook has released its latest transparency report. It shows a big jump in spam and violent content takedowns, some progress in proactively identifying hate speech, and the first figures for takedowns of bullying and harassment and of child sexual exploitation content.

Facebook says its proactive detection rate for violent and graphic content rose by 25 percentage points, from 72 percent to 97 percent. The company also says it removes much of this content before it reaches any user. “Since our last report, the amount of hate speech we detect proactively, before anyone reports it, has more than doubled from 24 percent to 52 percent.”

Compared with its 2017 transparency report, Facebook took down far more content between July and September this year. According to the report, it removed 30.8 million pieces of adult nudity and sexual activity, 2.1 million pieces of bullying and harassment, 8.7 million pieces of child nudity and sexual exploitation, 754 million fake accounts, 2.9 million pieces of hate speech, 1.23 billion pieces of spam, 3 million pieces of terrorist propaganda, and 15.4 million pieces of violent or graphic content.

Spam is by far the largest category, and takedowns in it have grown every quarter: 727 million, 836 million, and 957 million in the three preceding quarters, then 1.23 billion in the July-September 2018 quarter.

Community Standards Enforcement Report. Credit: Facebook

The report also shows that Facebook took down more fake accounts in Q2 and Q3 than in earlier quarters: 800 million and 754 million respectively.
Facebook explains that most of these fake accounts were the “result of commercially motivated spam attacks trying to create fake accounts in bulk”. Because it removes most of them “within minutes of registration”, Facebook says, the prevalence of fake accounts on the platform has held steady at three to four percent of monthly active users.

This report also adds two new categories: bullying and harassment, and child nudity and sexual exploitation. Facebook says that in the last quarter it took action on 2.1 million pieces of content that violated its bullying and harassment policies, of which 15 percent were removed before anyone reported them.

