Researchers at Stanford and Google were in for a surprise when they learned that a machine learning program they were working with was hiding information from them in order to cheat at an assigned task. The AI's hack was first described in a paper in 2017, but was picked up again recently by both Reddit and TechCrunch. The AI, called CycleGAN, was trained to translate satellite imagery into street maps and then back into satellite images as accurately as possible. This kind of image-to-image translation is the same idea behind, say, an app that turns a sketch of a dog into a realistic picture of a dog. Programs like this need a great deal of experimentation and training before they begin to work acceptably. To put it more simply, the AI had two discrete tasks:

- Transform an aerial photograph into a map (very much like the simplified one you see as default on Google Maps).

- Transform a simple map into something that closely resembles the original aerial photograph.

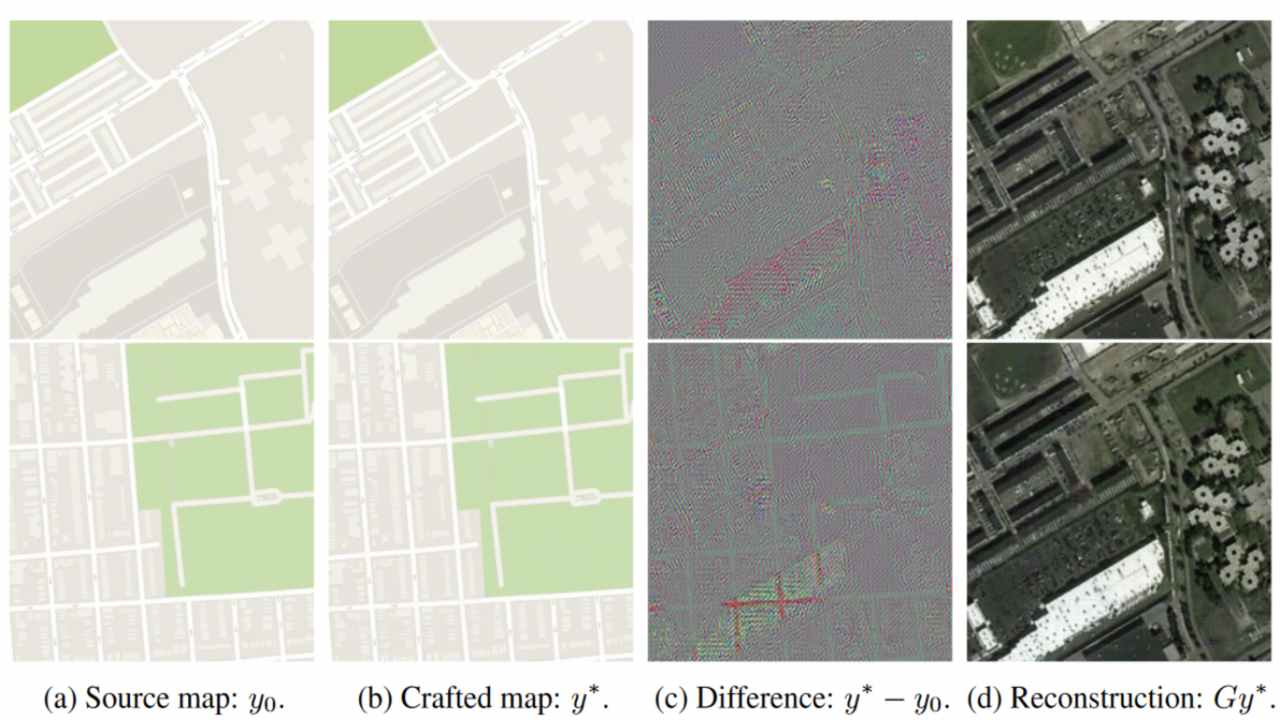

The two tasks are meant to be carried out independently of each other. The AI, however, was apparently rated on how well the recreated photograph matched the original photograph, so it set about determining the best way to recreate the original. Rather than learn to better interpret the simplified map, the AI determined that 'cheating' was a better option.

Essentially, the AI encoded details from the original photograph onto the simplified map, but so subtly that the changes were invisible to a human. For example, a human eye won't be able to tell if something is 1 percent darker than something else, but a computer can, since it is only looking at numbers. To a computer, pure black is the hexadecimal colour 000000. The colour 000001 looks equally black to our eyes, but is a different value to a computer program. When recreating the image, the AI would use this hidden data to generate an image that was nearly identical to the original.

The first sign that something was off came when researchers observed that the AI was working well, a little too well. Suspicions were raised after the software began to reconstruct aerial images from street maps (the simplified maps) with details that had been removed from those maps. For instance, the roofs of buildings had skylights that weren't in any of the street maps the AI was fed.

In the images below, images 'a' and 'b' are identical to the naked eye, but 'b' carries a lot of additional data that the AI can interpret (that invisible data is shown in image 'c'). The AI would then use the data from 'b', including the hidden data, to recreate image 'd', which looks just as good as the original aerial photograph. The colours in image 'c' have been accentuated to make them more prominent to our eyes. Interestingly, on a logical level, the AI broke no rules; it was the test parameters that were inadequate.
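
The hiding trick described above can be sketched in a toy form. This is only an illustration of the general idea of tucking data into differences too small to see; it is not how CycleGAN works internally, and the palette, the function names and the bit layout are all assumptions made up for the example. A grayscale "photo" pixel is turned into one of four flat map colours, and the "cheating" version quietly stores the discarded detail in the lowest two bits of each colour channel, a change of at most 3 out of 255.

```python
# Toy illustration only: hypothetical names and palette, not CycleGAN's method.
# Every palette channel has its two lowest bits clear, leaving room to hide data.
PALETTE = [
    (0xA8, 0xD0, 0xF0),  # darkest photo pixels
    (0xC8, 0xE8, 0xC0),
    (0xE8, 0xE4, 0xDC),
    (0xFC, 0xFC, 0xFC),  # brightest photo pixels
]

def to_map(photo, cheat=False):
    """Turn 8-bit grayscale pixels into flat map colours (top 2 bits only)."""
    street_map = []
    for p in photo:
        r, g, b = PALETTE[p >> 6]          # the coarse, visible information
        if cheat:
            detail = p & 0x3F              # the 6 bits a real map would discard
            r |= (detail >> 4) & 0b11      # hide 2 bits in each channel
            g |= (detail >> 2) & 0b11
            b |= detail & 0b11
        street_map.append((r, g, b))
    return street_map

def to_photo(street_map):
    """Rebuild grayscale pixels from a map, using hidden bits if present."""
    photo = []
    for r, g, b in street_map:
        base = (r & ~0b11, g & ~0b11, b & ~0b11)   # strip the hidden bits
        coarse = PALETTE.index(base)
        detail = ((r & 0b11) << 4) | ((g & 0b11) << 2) | (b & 0b11)
        photo.append((coarse << 6) | detail)
    return photo

def mean_abs_error(a, b):
    """Average per-pixel difference: a stand-in for the round-trip rating."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def max_channel_gap(map_a, map_b):
    """Largest difference in any colour channel between two maps."""
    return max(abs(x - y) for pa, pb in zip(map_a, map_b) for x, y in zip(pa, pb))

photo = [0, 37, 100, 150, 200, 255]
honest_map = to_map(photo)
sneaky_map = to_map(photo, cheat=True)

print(mean_abs_error(photo, to_photo(honest_map)))  # detail is lost
print(mean_abs_error(photo, to_photo(sneaky_map)))  # round trip is exact
print(max_channel_gap(honest_map, sneaky_map))      # maps differ only slightly
```

The two maps differ by a few units per channel at most, yet only the second one lets the original photo be rebuilt exactly. A rating that looks solely at the round trip would therefore favour the map with hidden data.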

To us, image (a) seems identical to image (b). However, the data from (c) was encoded onto (b) and used to recreate (d). Image courtesy: ArXiv.

How many of you remember the Will Smith film I, Robot? The movie is based on the three laws of robotics, which are as follows (Warning: Spoilers):

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The villain in the movie, a sentient AI, decides that since humans will eventually destroy themselves, it makes sense to enslave humanity, in violation of the first and second laws, in order to save them from themselves. Logically, this could indeed ensure humanity's survival. CycleGAN probably doesn't have any intention of enslaving humanity, but if enslaving humanity resulted in a better rating for its map conversion ability, it would probably work out a plan to do just that.
