NVIDIA has quietly rolled out a new large language model (LLM) called Llama-3.1-Nemotron-70B-Instruct.

The model is reported to surpass some of the biggest names in the industry, including OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet, on several key benchmarks.

Unlike its high-profile rivals, NVIDIA’s new LLM focuses on efficiency while still packing a punch. Its design is said to be more efficient than GPT-4o Mini or Meta’s own Llama models, even though it has 70 billion parameters.

NVIDIA fine-tuned the model to deliver sharp, human-like responses to general queries and coding tasks, cementing its versatility and practical application.

Top-notch performance on benchmarks

Llama-3.1-Nemotron-70B-Instruct builds on Meta’s Llama 3.1 model family, which uses the transformer architecture to deliver coherent and fluent language generation. What sets this model apart is its performance on benchmark tests, where it earned top marks across multiple metrics.

It achieved scores of 85.0 on Arena Hard, 57.6 on AlpacaEval 2 LC, and 8.98 on MT-Bench (with GPT-4-Turbo as the judge), surpassing competitors such as GPT-4o and Claude 3.5 Sonnet.

This achievement is especially notable given the model’s size. While Nemotron-70B is relatively compact with 70 billion parameters, it has still outperformed much larger models, highlighting NVIDIA’s focus on efficiency without compromising quality.

Open-sourced for the AI community

NVIDIA has chosen to open-source the Nemotron model, alongside its reward model and training dataset, making them available on Hugging Face. The AI model is also accessible for preview on NVIDIA’s official website, giving developers a chance to explore its capabilities firsthand.

Impact Shorts

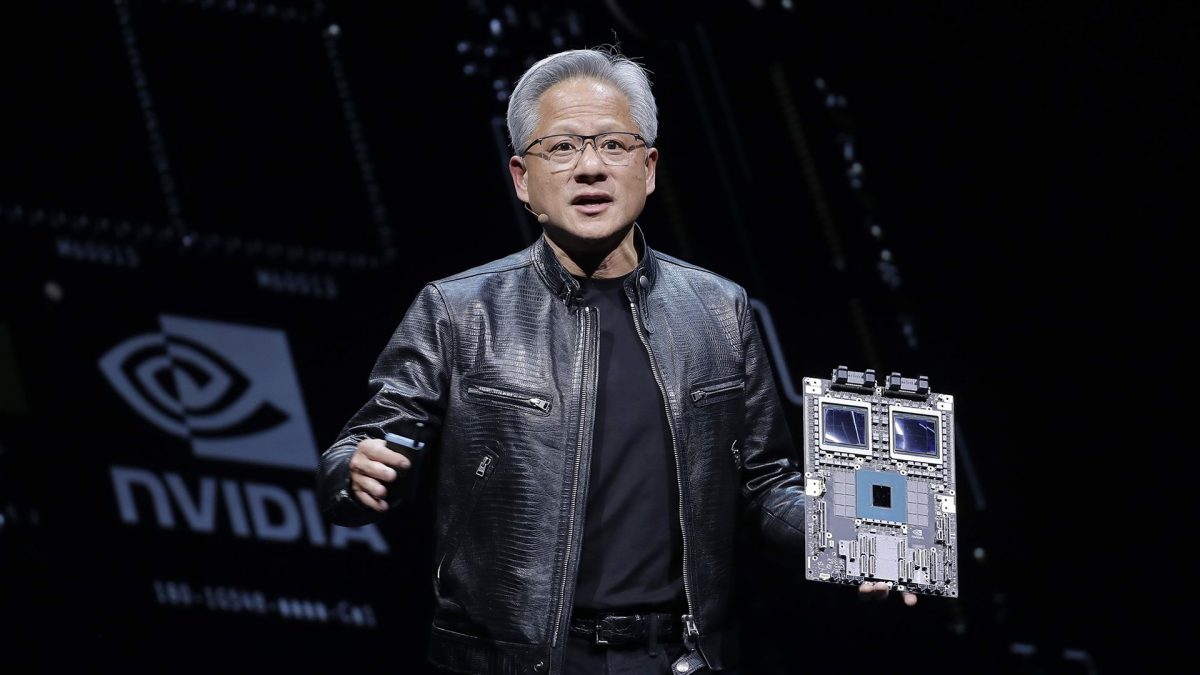

While NVIDIA is renowned for its dominance in the hardware space, particularly with high-performance GPUs, this latest release shows the company’s growing influence in the AI landscape. The Nemotron-70B is a reminder that smaller, more efficient models can still compete with and, in some cases, outperform larger, more established models from rivals.

By keeping this release relatively low-key, NVIDIA may be signalling a shift towards making cutting-edge AI models more accessible and open to experimentation. As the AI space continues to evolve, NVIDIA’s new model underscores the importance of balancing power and efficiency in the race for AI supremacy.
