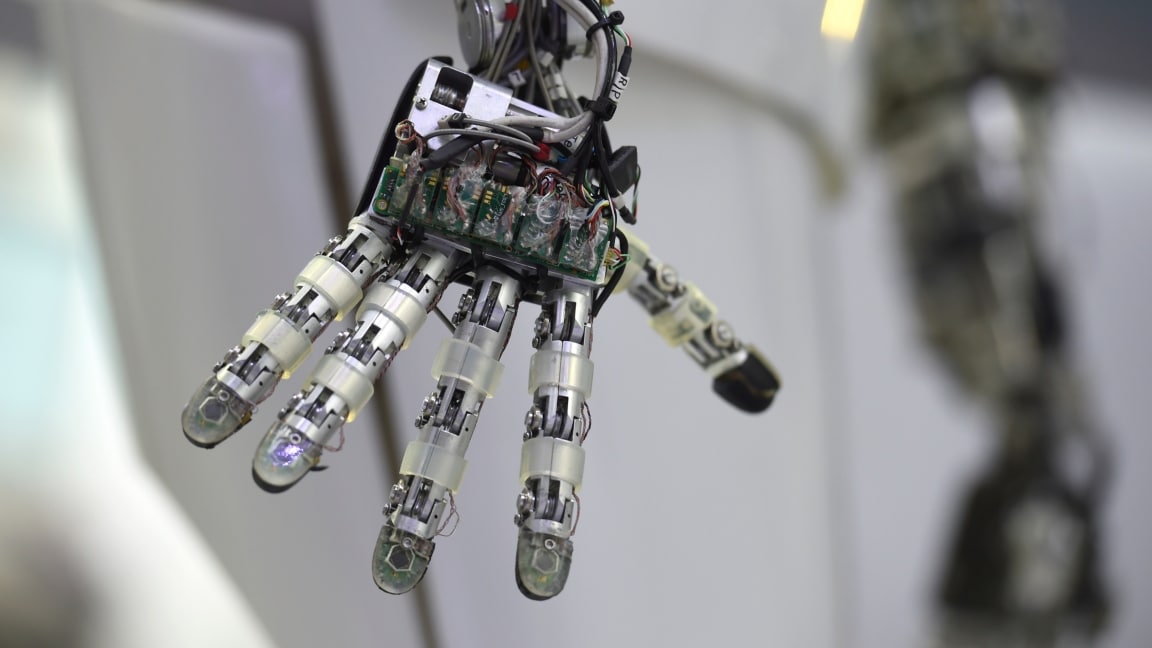

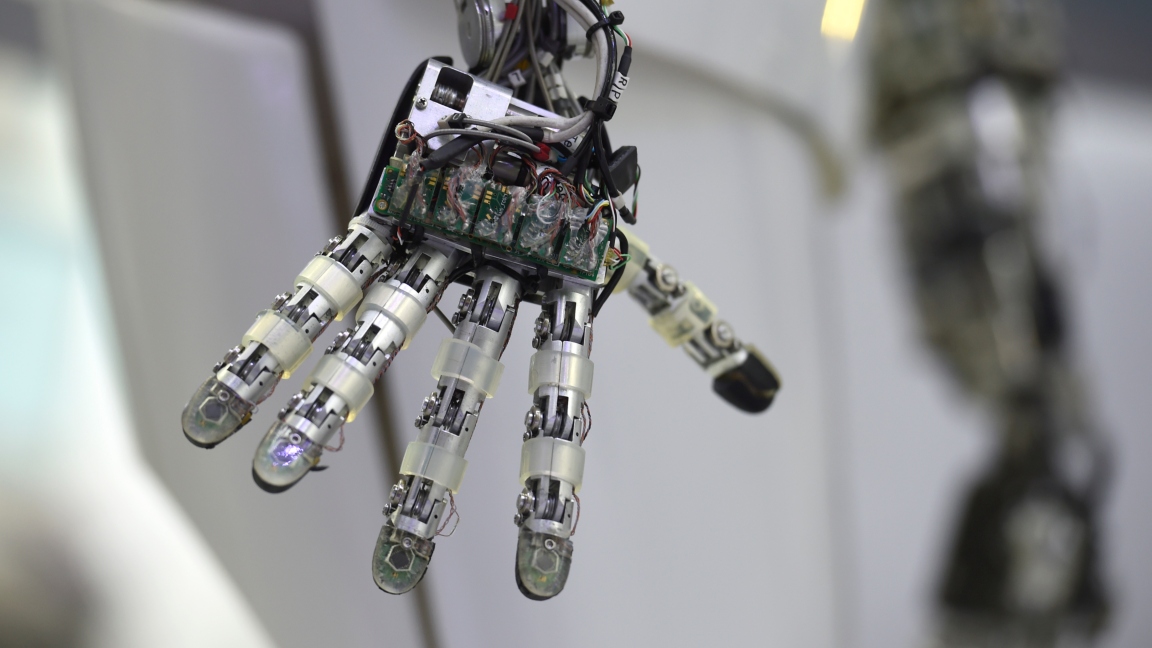

Researchers at **OpenAI**, a nonprofit **artificial intelligence** research group founded in 2015 and backed by Tesla Inc founder **Elon Musk** and **Silicon Valley** financier Sam Altman, have found a novel way to use software to teach a human-like robotic hand new tasks. The discovery could eventually make it more economical to train robots to do things that are easy for humans.

OpenAI said on Monday that it had taught a robotic hand to rotate a lettered, multi-colored block until a desired side of the block faced upward. The task itself was simple. The advance was in how the hand gained the skill: all of the learning happened in a software simulation and was then transferred to the physical world with relative ease.

The work addresses a long-standing challenge for robotic hands, which look like the fist of the robot in the 1980s “Terminator” science fiction film. Such hands have been commercially available for years but are difficult for engineers to program. Engineers can either write specific computer code for each new task, which means paying for a new program each time, or equip the robots with software that lets them “learn” through physical training.

Physical training takes months or years and has problems of its own. For example, if a robot hand drops a workpiece, a human needs to pick it up and put it back, which is also expensive. Researchers have sought to compress those years of physical training by distributing them across multiple computers running a software simulation, which can complete the training in hours or days without human help.

Ken Goldberg, a University of California, Berkeley robotics professor who was not involved in the OpenAI research but reviewed it, called the work released Monday “an important result” in getting closer to that goal. “That’s the beauty of having lots of computers crunching on this,” Goldberg said. “You don’t need any robots. You just have lots of simulation.”

A key advance in the OpenAI research was transferring the robot hand’s **software learning** to the real world, overcoming what OpenAI researchers call the “**reality gap**” between simulation and physical tasks. The researchers injected random noise into the software simulation, making the hand’s virtual world messy enough that the hand was not befuddled by the unexpected in the real world.

“Now we’re looking for more complicated tasks to conquer,” said Lilian Weng, a member of the technical staff at OpenAI who worked on the research.
