On March 18, 2024, a whole new world was born—a world that was expected to be at least 15 to 20 years away. Nvidia, a global leader in artificial intelligence development, is the starship taking us there. Its CEO, Jensen Huang, is fast on his way to dethroning Elon Musk as our modern world’s Oppenheimer.

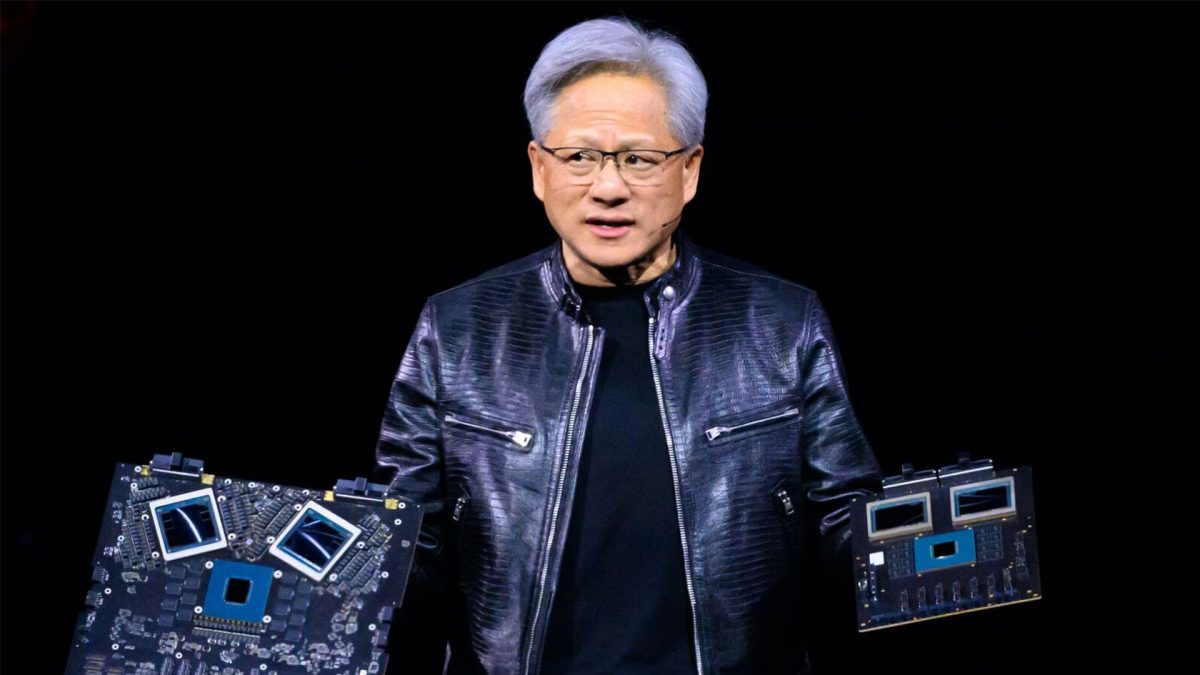

Taking a leaf out of the book of tech’s ultimate showman, Steve Jobs, Nvidia’s leather-jacket-clad CEO walked onstage to deliver the keynote address at the company’s annual GTC (GPU Technology Conference). He took the stage after an AI-themed introductory spectacle that looked like something that could play at the start of a post-apocalyptic video game.

“I hope you don’t think this is a rock concert,” were his first words on stage to a massive audience that filled the SAP Center in San Jose, California, on a dazzling spring day.

Nvidia, an American multinational technology company headquartered in Santa Clara, California, was founded in April 1993 and soon came to be seen as revolutionising the world of gaming. While most computer games of that era were CPU-based, Nvidia’s founders believed that computer graphics could only advance with a dedicated graphics processor. Since then, after establishing itself as the number one graphics chip provider for gaming, Nvidia has expanded into high-performance computing and, of course, into the buzzword of our times: AI, or artificial intelligence.

Today, it is the world’s third-most valuable company by market capitalisation, and its GTC, the premier event for AI innovators, developers and enthusiasts, certainly did not pale in comparison with any rock concert.

Once held in local hotel ballrooms, the conference has in fifteen years grown to be the world’s most important tech conference, and this year it returned as an in-person event for the first time in five years.

Generative AI promises to transform every industry it touches; what it needed was technology powerful enough to meet that challenge.

On Monday, Jensen Huang introduced that technology: the brand-new Blackwell computing platform.

“I’m holding around ten billion dollars’ worth of equipment here,” said Huang, holding up a prototype of Blackwell. The next one, he added, would cost half that amount, and from here on it would only get cheaper and more accessible.

Such are the nature and economics of technological advancement. Putting a bunch of these chips together will now crank out a level of computing power that has never been seen before.

Blackwell’s predecessor is Hopper. Depending on how you measure it, Blackwell is between two and thirty times faster; the top figure is Nvidia’s claim for large language model inference.

To make things clearer, Huang explained that training a 1.8-trillion-parameter GPT-MoE model (GPT-MoE-1.8T) previously took 8,000 Hopper GPUs, 15 megawatts of power and 90 days, whereas the new system could do it with just 2,000 GPUs and about a quarter of the power.
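As a rough, back-of-the-envelope reading of those figures, the energy arithmetic works out as below. This sketch assumes a constant power draw for the whole run and, because only the new system's GPU count and power are given, further assumes that the Blackwell run also takes 90 days:

$$
\begin{aligned}
E_{\text{Hopper}} &= 15\ \text{MW} \times (90 \times 24)\ \text{h} = 15 \times 2160\ \text{MWh} = 32{,}400\ \text{MWh} \\
E_{\text{Blackwell}} &\approx \tfrac{15}{4}\ \text{MW} \times 2160\ \text{h} = 3.75 \times 2160\ \text{MWh} = 8{,}100\ \text{MWh} \\
\text{GPU reduction} &= \frac{8{,}000}{2{,}000} = 4
\end{aligned}
$$

On those assumptions, the same job would need a quarter of the GPUs and roughly a quarter of the energy.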

Nvidia also introduced a new set of tools for automakers working on self-driving cars and new tools for roboticists to enhance their robots. Huang’s favourite phrase throughout the address was “AI factory”.

“There’s a new industrial revolution happening in these [server] rooms. I call them AI factories,” he announced.

Huang also presented NVIDIA NIM, a set of inference microservices that offers a new way of packaging and delivering AI software, connecting developers with hundreds of millions of GPUs to deploy custom AI of all kinds.

Also, to bring AI into the physical world, Omniverse Cloud APIs were introduced to deliver advanced simulation capabilities.

The star of the tech opera was definitely the Blackwell chip, held up triumphantly by its proud father, Jensen Huang, dwarfing its older sibling, the Hopper, physically and otherwise.

Named after David Harold Blackwell, a University of California, Berkeley mathematician specialising in game theory and statistics and the first Black scholar to be inducted into the National Academy of Sciences, this new microarchitecture has surpassed its antecedent by leaps and bounds.

Per chip, it delivers 2.5 times its predecessor’s FP8 performance for training and, in theory, five times the inference performance using the new, lower-precision FP4 format. It also features fifth-generation NVLink, an interconnect that is twice as fast as Hopper’s and scales up to 576 GPUs.

The NVIDIA GB200 Grace Blackwell Superchip connects two NVIDIA B200 Tensor Core GPUs to an NVIDIA Grace CPU over a 900 GB/s, ultra-low-power NVLink chip-to-chip interconnect.

Holding up a board carrying the system, Huang elaborated: “This computer is the first of its kind where this much computing fits into this small of a space. Since this is memory coherent, they feel like it’s one big happy family working on one application together.”

He went on to detail the energy, time and networking bandwidth the system will save, savings that will transform the world of work and herald a new era.

Generative AI, widely believed to be the future, is a type of AI that can create brand-new content and ideas, including conversations, stories, images, videos and music.

Huang said, “The future is generative…which is why this is a brand new industry. We’ve created a processor for the generative AI era.”

For the common man, what all this essentially means is that Blackwell is, by Nvidia’s figures, about four times faster at training AI models than its predecessor. That is expected to lead to unprecedented advances, at never-before-seen speed, in pretty much any industry you can think of, be it robotics, medicine, science, AI itself or others.

The industry has already embraced Blackwell with open arms and a wide smile. The press release announcing Blackwell includes enthusiastic endorsements from luminaries such as Alphabet and Google CEO Sundar Pichai, Dell Technologies CEO Michael Dell, Microsoft CEO Satya Nadella, OpenAI CEO Sam Altman, Oracle Chairman Larry Ellison and the multifaceted celebrity CEO of our times, Elon Musk.

Blackwell is expected to be adopted by every major global cloud services provider, as well as by pioneering AI companies and server and system vendors around the world.

Our very own homegrown data centre and cloud AI infrastructure provider, Mumbai-based Yotta Data Services, headed by Sunil Gupta, has already received its first batch of GPUs from Nvidia and is looking to raise funds to complete its order, which includes the Blackwell AI training GPU.

Blackwell is expected to reach Indian shores by October. While tech, telecom and other industries are lining up to put Blackwell to use, its greatest impact is expected to be on healthcare.

Nvidia’s technology is already used in medical imaging systems and gene-sequencing instruments, and the company is working with leading surgical robotics companies.

It is safe to say the 2020s will be the decade of AI. As the day of true generative AI draws ever closer, only the companies that have laid the required groundwork will be in a position to keep up and serve all the pillars of their digital transformation: people, customers, operations, finance and product innovation.

The author is a freelance journalist and features writer based out of Delhi. Her main areas of focus are politics, social issues, climate change and lifestyle-related topics. Views expressed in the above piece are personal and solely those of the author. They do not necessarily reflect Firstpost’s views.