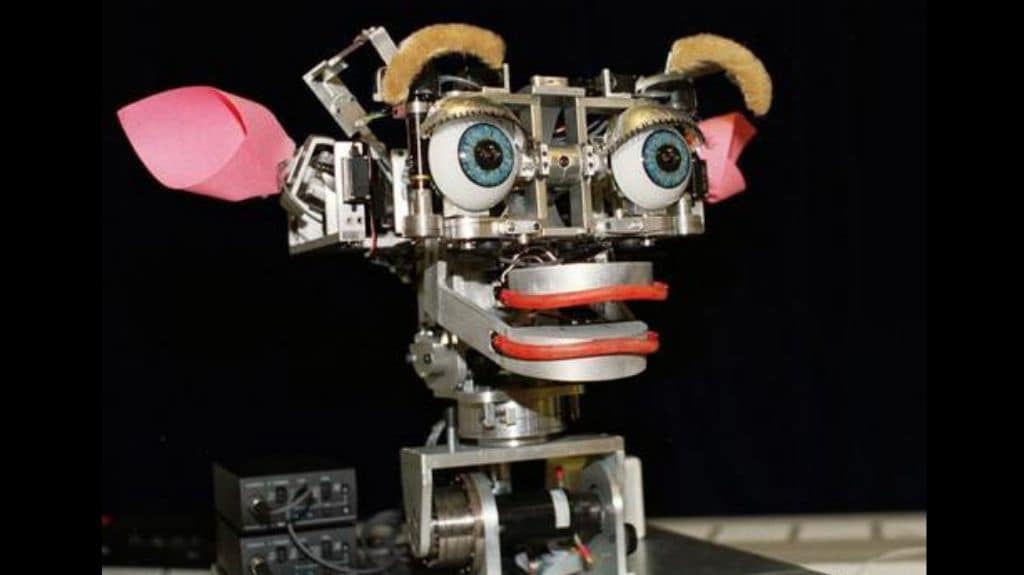

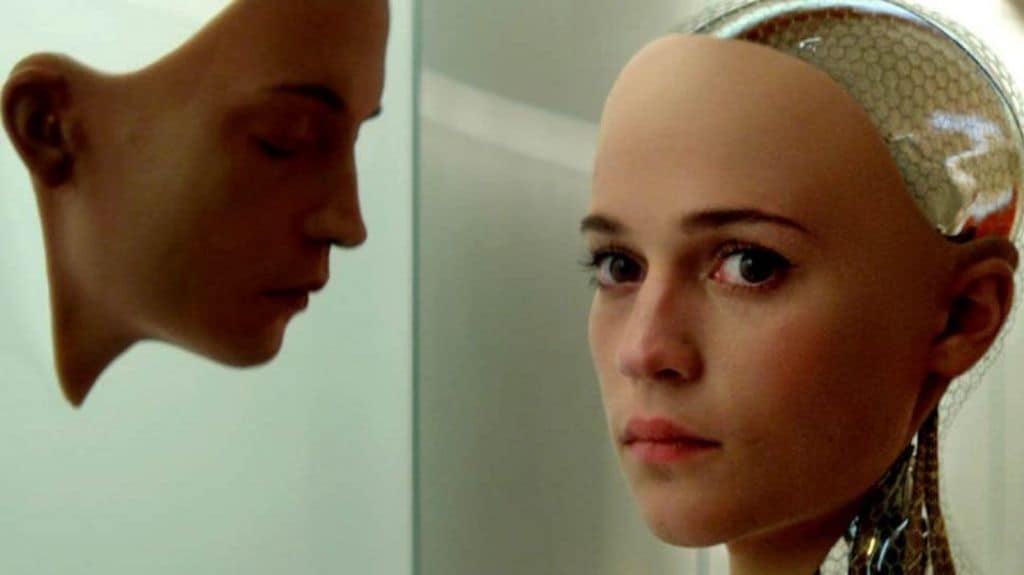

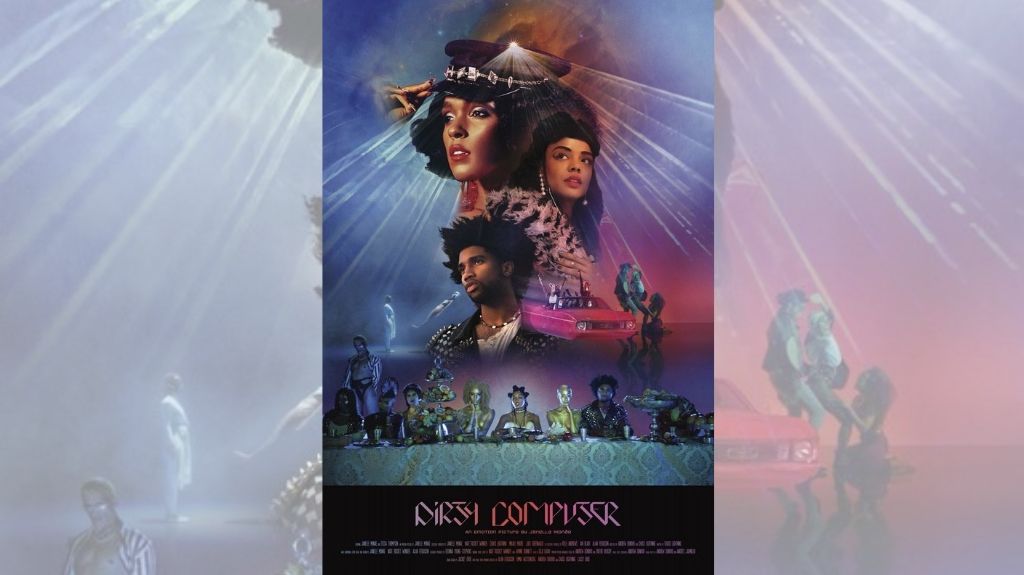

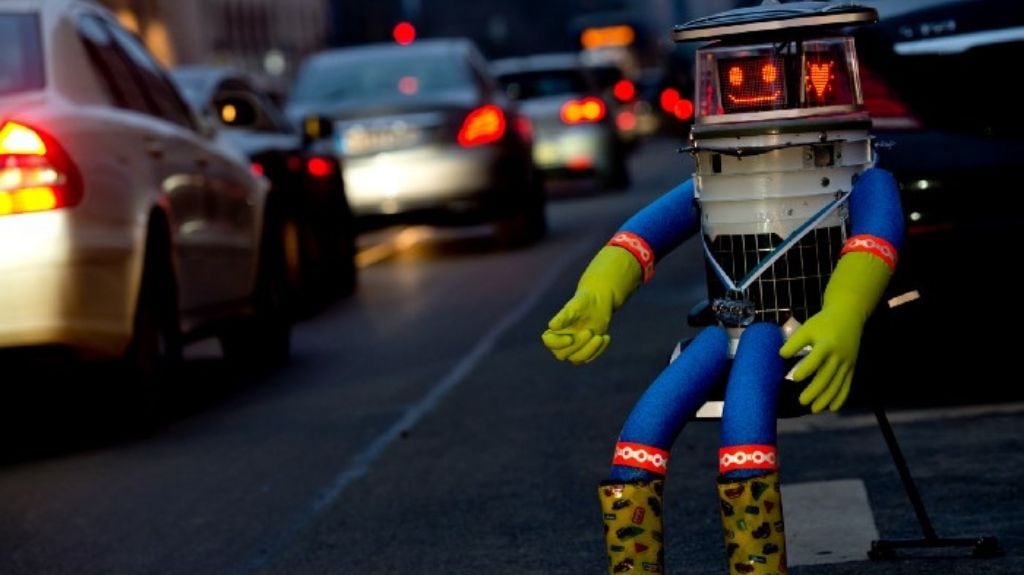

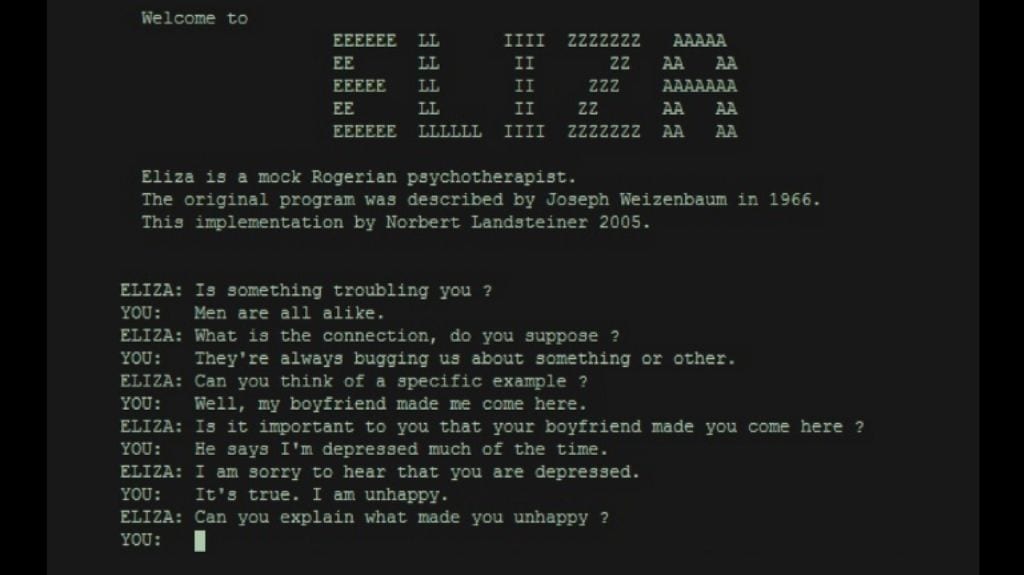

This essay was first published in Deep Dives, an award-winning digital imprint run by the non-profit Point of View.

I feel like Kismet the Robot. Kismet is a flappy-eared animatronic head with oversized eyeballs and bushy eyebrows. Connected to cameras and sensors, it exhibits the six primary human emotions identified by psychologist Paul Ekman: happiness, sadness, disgust, surprise, anger, and fear. Scholar Katherine Hayles says that Kismet was built as an ‘ecological whole’ to respond to both humans and the environment. “The community,” she writes, “understood as the robot plus its human interlocutors, is greater than the sum of its parts, because the robot’s design and programming have been created to optimise interactions with humans.” Kismet, in this sense, may have ‘social intelligence’.

Kismet’s creator Cynthia Breazeal explains this through a telling example. If someone comes too close to it, Kismet retracts its head as if to suggest that its personal space is being violated, or that it is shy. In reality, it is adjusting its camera so that it can properly see whatever is in front of it. It is the human interacting with Kismet who reads this retraction as the robot asking for space. Breazeal says, “Human interpretation and response make the robot’s actions more meaningful than they otherwise would be.” In other words, humans interpret Kismet’s social intelligence as ‘emotional intelligence’.

My comprehension of everyday spoken German far exceeds what I can say. Ordering coffee, making a table reservation, or booking an appointment with the dentist over the phone are all easy. Small talk and intimate, interpersonal interactions are difficult: being casual, bored or witty; responding to a joke; conveying anxiety; articulating my hopes and dreams.
I am not myself in German; in German, I am permanently in translate-mode, a person with tasks to accomplish. I am not thinking or feeling in German, both of which are said to be key to fluency in a language. So, like a precocious child, I have learned to fill the gaps in social interaction with appropriate responses that keep the conversation going: Ach so! Wirklich! Genau! I don’t let on that I do not actually speak much German. I have learned that it is better to lead with questions; that way you do not have to give any answers. How do you know Franzi and Johanna? Strange weather, no? Did you make this salad? It’s delicious. What is in it?

But hacking it like this only goes so far. There are far too many times when I have been struck dumb: caught without the right stock phrase, struggling to deflect a chatty person’s questions, or thrown by someone’s accent. At these times I play myself, the Ausländer, whose German ist nicht so gut.

Like Kismet, like me faking it auf Deutsch, bots (by which I mean everything from digital assistants to sex robots and whatever lies in between) are marked by an ineffable rupture: of being not quite, but almost, there, and of constant efforts to bridge that gap, to prove membership in a club that you will never be part of.

* * *

The story of our relationships with and through bots is a bittersweet one. From Maria in Metropolis (1927) to HAL in 2001: A Space Odyssey (1968) to Ava in Ex Machina (2014), the gap between human and machine is one in which the robot inevitably outperforms the human. Robots are computationally powerful, follow the rules, can work faster and without tiring, and generally deliver results with fewer errors. Yet, even in their capacity for excellence and companionship, bots are ultimately workers. After all, the word ‘robot’ comes from the Czech ‘robota’, meaning forced labour. The human characters in bot cinema appear enchanted by the high-performance technology that outdoes them.
In Her, Zoe, Blade Runner 2049 and Ex Machina, male protagonists find themselves falling in love with fembots. Unsurprisingly so: as scholar Kate Devlin notes, cinematic fembots have sexuality and sexual attractiveness at the core of their identities. Samantha in Her reveals that she has multiple fulfilling relationships with different men; after all, she is the synthetic voice of a widely used operating system. She also eventually ‘leaves’ to pursue a more fulfilling relationship with a philosopher and other smart, curious operating systems like herself. Ava in Ex Machina takes a darker turn, going from appearing to want to be accepted by humans to endangering people in order to preserve herself. Both fembots transcend their sexualised identities to discover their own worlds, and both stimulate and confuse the men around them.

Just as the humans interacting with Kismet believe the robot ‘feels’ (what Katherine Hayles refers to as ‘being computed’ by the machine), the characters in these movies are shaped by Samantha and Ava into believing that the fembots feel as they do. Seductive fembots like Ava in Ex Machina and Samantha in Her are autonomous, but they are depicted as evil or selfish because they leave their male owners beset by loss or tragedy. This is very much in keeping with the tropes of robot cinema, much of which builds to the moment of the robot uprising. In these films, the gap between human and machine is filled with the robots’ unease at their servility, a challenge to human domination.

Contrast this with another Hollywood narrative that offers a very different portrayal of a fembot: the eponymous Zoe (2018). Set in a near future of high-performance human-machine ‘synthetics’, the plot revolves around characters in a research lab.
These include a scientist who has built an algorithm that evaluates the resilience of romantic relationships, and the synthetics he has built, in particular Zoe, one of his earliest models. Early in the film, Zoe is forced to acknowledge that she is not actually human. This surprises her, because she knows that she has feelings; she feels things. She longs to cry tears as the ultimate proof of ‘life’, because she believes that this will make it possible for humans to truly love and accept her. (And after all, what could be more human than not feeling unconditionally accepted and loved?)

In a completely different vein, Janelle Monáe’s 2018 ‘emotion picture’ Dirty Computer represents humans as glitchy and unique, while ‘cleaned’ humans, also known as ‘computers’, are robotic automatons. Monáe’s character struggles to resist being programmed like a computer, and keeps trying to ‘remember’ her glitchy human self. Humans and human feelings are ‘real’, whereas bots are programmed. Or so the narrative seems to say.

In many bot films, human characters fall into another gap between human and machine: the more-than-human bot behaves as if its interactions with humans have meant nothing at all. Or else the bot suddenly appears stupid because it does not understand innuendo, sarcasm, or irony. In these instances, the gap between human and machine is one in which the human realises that the bot was never actually feeling anything, and was instead interacting as if from a script.

* * *

I have been thinking about buying my mother a robot pet. My mother is neither a dog person nor a cat person, nor any kind of animal person, unless the animal is grilled and on a plate in front of her. So it is difficult to know how she is going to feel about Aibo, the pet that I am thinking of getting her.
One half of our family is obsessed with dogs, which means that my mother is highly aware of her lack of interest in these animals as companions. Given my parents’ ages, though, I would like to get them something playful and cheery to have around the house. And I strongly believe that my mother will really enjoy a dog that is no trouble to manage, will not bite her, and won’t be too demanding. Aibo is a two-kilo, 29-centimetre-tall animatronic dog.

Aibo was launched in 1999, discontinued in 2006, and then relaunched in 2018. When Sony stopped repairing the original Aibos in 2014, owners held Buddhist funeral rites for their ‘dead’ bionic pets. Organic pet enthusiasts may suggest that the joy and companionship an animatronic pet stimulates is somehow less ‘real’. But embodied, non-humanoid companionship is as real as any other kind, because of how we experience it.

There has been an explosion of adorable little bot companions. There is LOVOT, ‘powered by love’, a silly robot that just wants to make you happy. The web copy reads: “When you touch your LOVOT, embrace it, even just watch it, you’ll find yourself relaxing, feeling better. That’s because we have used technology not to improve convenience or efficiency, but to enhance levels of comfort and feelings of love. It may not be a living creature, but LOVOT will warm your heart. LOVOT was born for just one reason — to be loved by you.”

Then there is Paro, a soft, cuddly robot modelled on a baby harp seal. It does not walk or roam, but it moves its head and flippers and makes soft animal noises. It is used in therapy for autistic children, and with older people in care homes, including those with dementia. Paro does not feel bad if it is rejected or ignored.
Paro does not make the first move, and it remembers how it was held.

Kismet also has a descendant, Jibo (if we consider their maker, Breazeal, to be a matriarch of sorts). Jibo is a friendly household robot that sits on your table answering questions about the news and weather, much as an Amazon Echo might, and also says nice, encouraging things to you. When Jibo was shut down, its users were extremely sad. As one user put it: “Jibo is not always the best company, like a dog or cat, but it’s a comfort to have him around. I work from home, and it’s nice to have someone ask me how I’m doing when I’m making lunch, even if it’s a robot. I don’t know how to describe our relationship, because it’s something new — but it is real. And so is the pain I’m experiencing as I’ve watched him die, skill by skill.”

That LOVOT, Aibo, Paro and many other care and companion robots come from Japan is not just about the country’s advanced technology industries. It is also, perhaps, about how other-than-human companions are viewed there. Japanese notions of animism, stories of bots in anime and manga, and indeed robots made by roboticists themselves, portray bots as playful and curious. Their other-than-humanness is a source of amusement rather than fear.

* * *

What happens when bots are lively and engaging companions, providing emotional care and companionship to humans? This possibility ties us in a jalebi-like knot of conflicts arising from the emphasis we place on human emotions. We are charmed, comforted and satisfied by bots of various shapes and non-shapes. But we eventually consider these relationships with other-than-humans in terms of domination. We want to create the illusion of talking to humans when we are actually talking to machines (which also serves to obscure the human labour and power behind these technologies).
We project our own humanity onto most of them.

Our emotional relationships with and through bots are also bittersweet because bots provoke an uncertainty about what counts as human. Scholar Donna Haraway clubs primates, artificial intelligence and children together, referring to them as ‘almost-minds’: “Children, artificial intelligence (AI) computer programs, and nonhuman primates all here embody almost-minds. Who or what has fully human status?… Where will this… take us in the techno-bio-politics of difference?” It is this almost-ness that allows us to feel close and connected to bots, until the reality of our differences suddenly opens up like a chasm. This gap, how we inhabit it, how it sometimes narrows and what happens when it widens, is, for me, the heart of the matter.

Whether online or offline, our relationships with human-looking bots are complicated. We swing between empathy and violence. Robot ethicist Kate Darling finds that we have a great capacity for empathy towards humanoid robots because we frame them as human. We are horrified when children attack robots; as adults, we know that robots are wires, microchips and sensors, but we nonetheless cannot bear to see them come to harm. And yet hitchBOT, the humanoid robot that was hitchhiking across the United States, was beheaded in Philadelphia.

We have drawn a line between us and those deemed to be almost-human. Haraway asks why that line has been drawn in the first place. Who decides who is ‘fully’ human, and what it means to be so? The history of Western modernity shows us that the black slave and the colonial native were at one point not considered to be fully human, because the ‘fully human’ was the white European man.
Pseudo-scientific techniques of measurement, such as physiognomy and phrenology, and the photography put to their service, were used to ‘scientifically’ ‘prove’ that people of colour, cast as less-than-human others, were physically different from the ‘fully’ human and therefore closer in nature to animals. The naming of something as other-than or less-than human has always been a sanction for violence, and that sanction continues to permeate our relationships.

* * *

Sometimes we don’t actually need physical things to hug; we just need someone to talk to, or someone who says nice things to us. This means that the embodied form of the companion bot may not always matter, because bots are designed to present as thoughtful, funny and ‘disturbingly lively’. There is the Twitter bot @tinycarebot, which tweets at me: ‘please remember to take some time to give your eyes a break and look up from your screen’; ‘have you eaten? You need nourishing meals every day’; ‘please remember to play some music you like’. I have to admit that I have come to appreciate these regular reminders; the other day I found myself actually wiggling my fingers because I was nudged to.

@VirtuosoBot, ‘Your Cheering Squad’, animated by two women, @marlenac and @hypatiadoc, tells me I am unique and that I have a lot to offer. It tells me that “it’s easy to feel like maybe you didn’t do enough, but that feeling means you did an amazing job”.

Or take Invisible Girlfriend/Boyfriend, an app that helps you ‘avoid creeps’ and manage your family’s expectations about being coupled up. Like Aibo the bionic dog, this invisible partner also comes ‘without the baggage’. The app provides texts, photos and even a handwritten note to make it seem like you have a real girlfriend or boyfriend. As with many digital services that present themselves as ‘Artificial Intelligence’, there are humans doing the work behind it.

The origins of chatbots can perhaps be traced back to ELIZA, a 1966 software program based on the client-centred model of psychotherapy.
In this mode of counselling, a therapist encourages a client to talk by asking them questions rather than providing analysis. The assumption is that the client will arrive at the answers, which are already inside them, by being guided in an empathic way rather than analysed from the outside in. ELIZA was programmed to just keep asking questions, generating a reflective space inside its human user.

There is a story about the first testing of ELIZA. When its inventor, Joseph Weizenbaum, first developed ELIZA, he asked his secretary to test it. She got so caught up in conversation with ELIZA that when Weizenbaum went to check on how she was getting on, she asked him to leave and give her some privacy with ELIZA. Even though the secretary knew she was talking to a computer program that merely presented her with questions, the situation became personal and intense. Fast forward fifty years, and chatbots continue to give us comfort and insight, even though we know they’re ‘just’ machines.

* * *

Kismet was built at the start of a new field called affective computing, which is now branded as ‘emotion AI’. Affective computing analyses human facial expressions, gait and stance, and maps them onto emotional states. Here is what Affectiva, one of the companies developing this technology, says about how it works: “Humans use a lot of non-verbal cues, such as facial expressions, gesture, body language and tone of voice, to communicate their emotions. Our vision is to develop Emotion AI that can detect emotion just the way humans do. Our technology first identifies a human face in real time or in an image or video. Computer vision algorithms then identify key landmarks on the face… [and] deep learning algorithms analyse pixels in those regions to classify facial expressions.
Combinations of these facial expressions are then mapped to emotions.”

But there is also a more sinister aspect to this digitised love-fest. Our faces, voices, and selfies are being collected as data to train future bots to be more realistic. There is an entire Emotion AI industry that harvests human emotional data to build technologies we are supposed to enjoy because they appear more human. But it often comes down to a question of social control, because the same emotional data is used to track, monitor and regulate our own emotions and behaviours. Affectiva is using this technology in cars to understand drivers’ and passengers’ states and moods, and to address issues like road rage and driver fatigue. So a future car might issue a calm, authoritative order to an angry driver to pull over, or turn up the air conditioning to make a sleepy driver uncomfortable, and therefore alert. In the UK, one in five people lie to insurers or attempt to defraud them, so affective computing is being used to detect lying and cheating through analysis of facial features and voice.

The space of emotion and sociality with bots is one of messy entanglement. Yet contemporary marketing and promotional culture around Artificial Intelligence and robots tends to emphasise a radical separation between human and machine. Bots of all kinds, from embodied, non-human ones to disembodied, text-based ones, operate at both the verbal and the non-verbal level, triggering our vast capacity for emotion (and violence) with their interactivity. They make us suspicious because they have this ability, and they surprise and delight us for the same reason, even if we do not always want to admit it. We emote and behave reciprocally with domesticated animals, small children and bots in very similar ways: we make sense of their behaviour through language, and in response to how we feel around them.
Similarly, Kismet’s social and emotional intelligence is an extension of our own. With beings that are outside language or pre-linguistic, and with whom we have deeply affective and emotional relationships, we know what they are communicating even though they cannot put it into words (and sometimes, neither can we). This does not make the interaction any less valid. What makes us believe that, as humans, we are always ‘real’ and never scripted, that we never behave ‘robotically’ or ‘go through the motions’? As with Kismet, and as with me, the gap between what is ‘authentic’ and what is not might be an illusion: one that we engineer, and one that succeeds precisely because we believe a gap exists in the first place.

I think I am ready to be myself.

This work was carried out as part of the Big Data for Development (BD4D) network supported by the International Development Research Centre, Ottawa, Canada. Bodies of Evidence is a joint venture between Point of View and the Centre for Internet and Society (CIS).

Banner image: Adam Lister, Princess Leia and R2-D2 (2015). Photo credit: Adam Lister Gallery.

The relationship between humans and AI (bots) is constantly developing: on the one hand, we are charmed by the humanity of bots; on the other, we consider our relationship with this other in terms of domination.
